National Science Day 2024

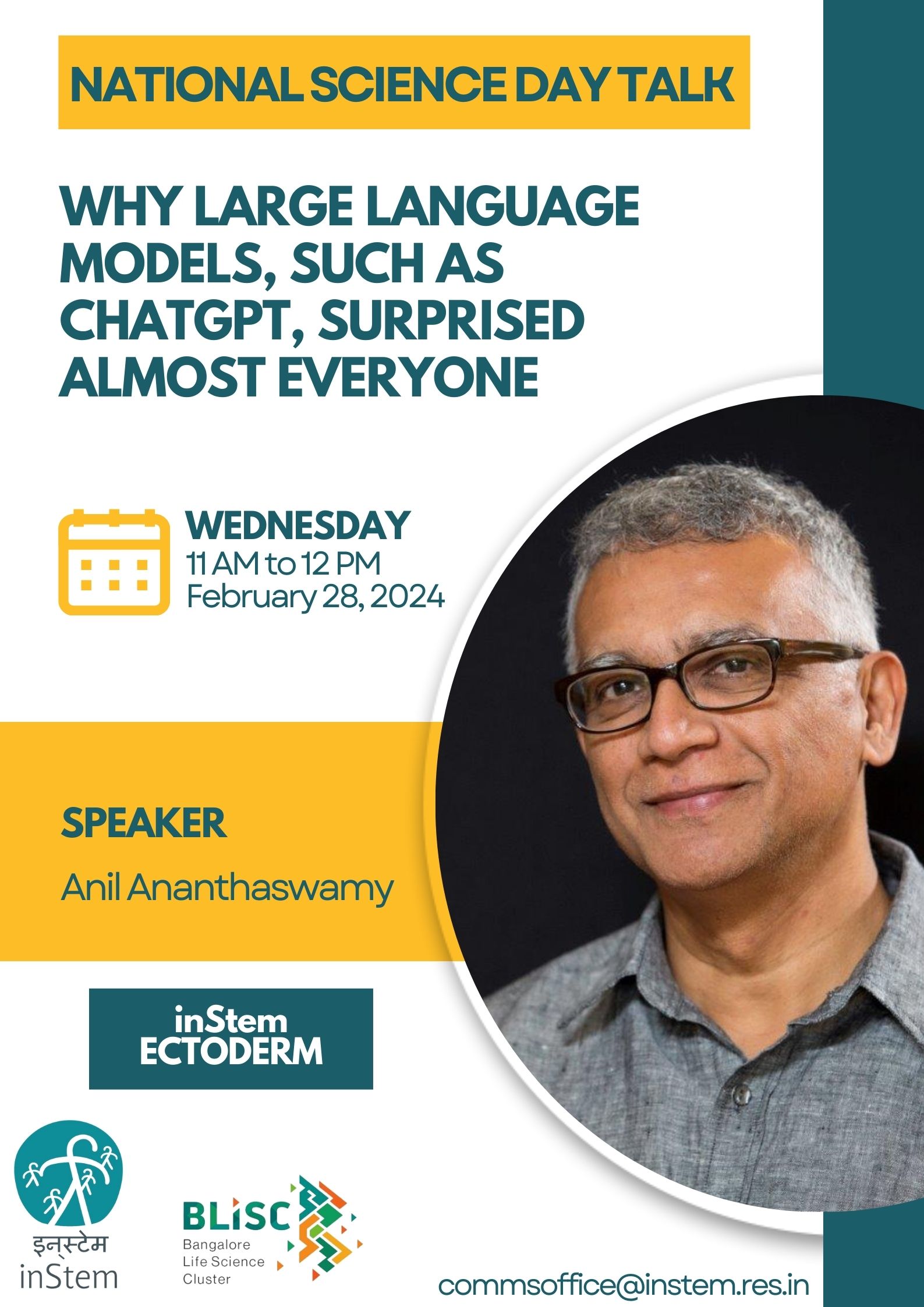

Why Large Language Models, such as ChatGPT, Surprised Almost Everyone

- Anil Ananthaswamy

It's an understatement to say that 2022 was an unprecedented year for machine learning. Large Language Models (LLMs) came of age. Suddenly, ChatGPT (a chatbot based on GPT-3.5) was demonstrating behaviors that did not exist in its predecessors. But the main difference between these models was their size: GPT-3.5 was an order of magnitude larger and was trained on significantly more data. The training process, however, was exactly the same for the smaller and larger models. Nothing in the training process suggested that an LLM should show abilities that did not exist in its smaller brethren. In this talk, Anil Ananthaswamy will discuss the basics of large language models, the observation that scaling up LLMs, whether in their size or in the amount of data they are trained on, seems to endow them with new "emergent" abilities, and why it's hard to make sense of these abilities simply by looking at the training process.